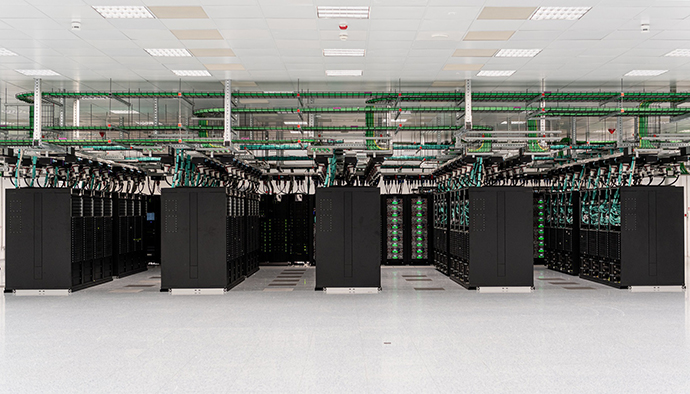

After an international tender, on 22 June 2017 representatives of ECMWF Member States approved the proposal by the Italian Government and the Emilia-Romagna region to host ECMWF’s new data centre in a refurbished former tobacco factory in Bologna, Italy. The Emilia-Romagna region provided the land, the existing building and the design, and it promised full delivery of an operational data centre as part of the regeneration and refurbishment of the former tobacco factory site. Following a tender process, the region appointed a consortium of Italian contractors, who started work on site in December 2018. By the end of 2021, all construction work had finished and a new Atos BullSequana XH2000 high-performance computing facility (HPCF) had been installed on the premises (for details on the HPCF, see Hawkins & Weger, 2020).

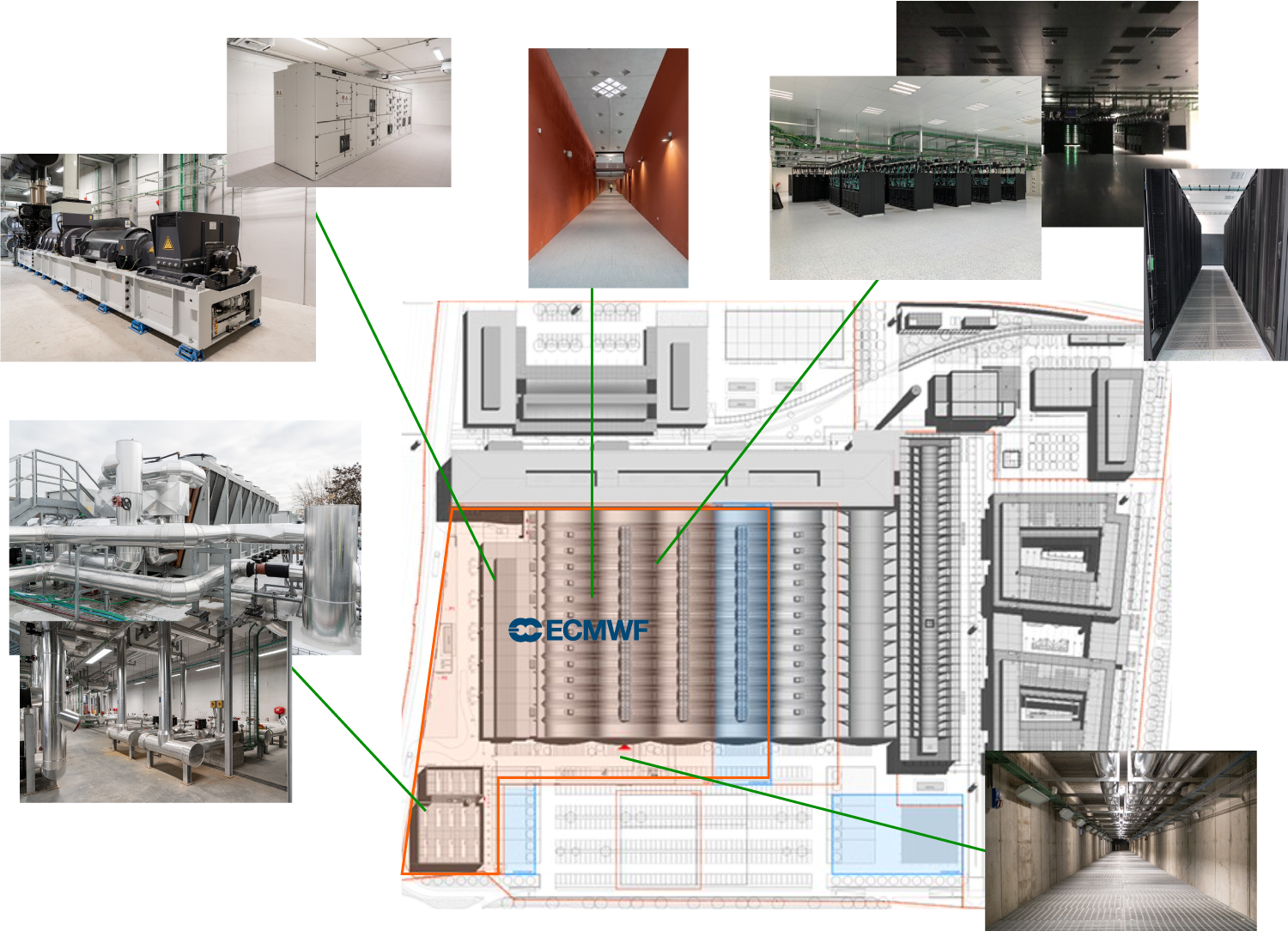

Construction work included refurbishing the old buildings to house five diesel rotary uninterruptible power supplies, the electrical infrastructure, data halls and offices; erecting a new building, known as the L2 building, to house the chillers and pumps; digging two tunnels connecting this building to the main building; and constructing a new electrical sub-station. Towards the end of 2020, despite the COVID‑19 pandemic, all the main infrastructure work on the data centre site had been completed and the building services and systems were almost finalised. By the end of April 2021, most tests had been completed, allowing ECMWF to start deploying the network and installing and connecting servers.

In June 2021, partial handover from the region to ECMWF took place. This gave the Centre control of systems and processes, ensuring managed resilience of the data centre, whilst ECMWF continued installing information and communications technology (ICT) systems and started the HPCF build in Bologna. During September, the data centre was formally opened by representatives of ECMWF and the Italian authorities.

Mechanical and electrical infrastructure

The electrical and mechanical systems have been designed to ensure resilience and reliability in accordance with ECMWF tender requirements and operational needs. Each system and each component is redundant, so that in the event of failures or maintenance activities, the power supply and cooling to the computer rooms are guaranteed.

As for electricity, the site utility supply allows for two fully rated electrical 10 MW circuits. Two points of delivery, a main one and a spare one, are powered from two different lines. They are connected within a closed loop to five transformer substations, which feed the five 2 MW diesel rotary uninterruptible power supply (DRUPS) units. The outputs of the DRUPS are in turn connected to an ‘isolated ring’: in this way, the data centre has five power supply branches, and if one of them fails, the other four support the load of the site automatically. From the five DRUPS, through the critical distribution boards, busbars and cables, the energy reaches the computer rooms. Each element of ICT equipment is powered by at least two of the five branches, except the HPCF racks, which are powered by three branches, giving extra resilience.
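The branch redundancy described above can be illustrated with a minimal Python sketch. The branch labels `A` to `E` and the function names are hypothetical and chosen only for illustration; the only figures taken from the text are the five branches, the 2 MW rating per DRUPS, and the two-branch and three-branch feeds. The sketch ignores real-world details such as load sharing on the isolated ring.

```python
from itertools import combinations

# Hypothetical labels for the five power supply branches (one per DRUPS).
BRANCHES = ("A", "B", "C", "D", "E")
DRUPS_RATING_MW = 2.0  # from the text: five 2 MW DRUPS units


def surviving_capacity_mw(failed):
    """Nominal DRUPS capacity left after the given branches fail."""
    return DRUPS_RATING_MW * (len(BRANCHES) - len(set(failed)))


def stays_powered(feeds, failed):
    """True if at least one branch feeding the equipment is still up."""
    return bool(set(feeds) - set(failed))


def tolerated_failures(feeds):
    """Largest k such that the equipment survives ANY k simultaneous branch failures."""
    tolerated = 0
    for k in range(1, len(BRANCHES) + 1):
        if all(stays_powered(feeds, failed) for failed in combinations(BRANCHES, k)):
            tolerated = k
        else:
            break
    return tolerated


if __name__ == "__main__":
    print(surviving_capacity_mw(["A"]))         # one branch down: 8.0 MW nominal
    print(tolerated_failures(("A", "B")))       # dual-fed ICT equipment: 1
    print(tolerated_failures(("A", "B", "C")))  # triple-fed HPCF rack: 2
```

Under these assumptions, dual-fed equipment survives the loss of any single branch, while the triple-fed HPCF racks survive the loss of any two.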

| Project meetings | Monitoring meetings | Handover documents | Administrative acts | Regional laws | National law | National Decree |
|---|---|---|---|---|---|---|
| 136 | 39 | 7,685 | 144 | 4 | 1 | 2 |

| Companies | Workers per day – on average | Workers per day – peak | Electrical cables | Concrete | Steel |
|---|---|---|---|---|---|
| 80 | 180 | 382 | 50 km | 48,000 tons | 2,000 tons |

The data centre cooling system relies on three sources, operating alternately and together, based on the season: geothermal wells, adiabatic dry coolers and chillers. To minimise the environmental impact and reduce operational costs to ECMWF, the system uses ground water to support the data centre cooling for part of the year. So far, the wells have been dug and the pumps installed, with final wiring and connections almost finalised. Geologists are assessing the wells’ performance before issuing ECMWF with the necessary licence to bring them online.

The site has two thermal power plants, each with four chillers, two dry coolers and two geothermal well systems, as well as a reserve chiller as a further degree of redundancy. The two mechanical plants, through two pumping stations and two underground tunnels, bring chilled water from the L2 building to the computer rooms. If needed, the plants can be connected, so that one also cools the thermal load of the other, providing further flexibility.

To optimise energy efficiency and thereby reduce consumption, the system has three operating stages. During the winter season, only the adiabatic dry coolers will work. When the external temperatures become too high, the geothermal wells will start operating and will gradually take over the entire plant. In the hottest season, chillers will be used to respect the groundwater withdrawal limits of the Italian environmental authority and provide the cooling capacity for the data centre.
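As a rough illustration of the three operating stages, the following Python sketch selects a stage from the outdoor temperature alone. This is a simplification: the text does not give switchover temperatures, so the thresholds are passed in as hypothetical parameters, and a real control system would also take the groundwater withdrawal limits and the gradual handover between sources into account.

```python
from enum import Enum


class Stage(Enum):
    DRY_COOLERS = "adiabatic dry coolers only"
    GEOTHERMAL = "geothermal wells taking over"
    CHILLERS = "chillers in use"


def select_stage(outdoor_temp_c, wells_start_c, chillers_start_c):
    """Pick an operating stage from the outdoor temperature.

    wells_start_c and chillers_start_c are hypothetical switchover
    temperatures; the source article does not state actual values.
    """
    if wells_start_c >= chillers_start_c:
        raise ValueError("wells_start_c must be below chillers_start_c")
    if outdoor_temp_c < wells_start_c:
        return Stage.DRY_COOLERS
    if outdoor_temp_c < chillers_start_c:
        return Stage.GEOTHERMAL
    return Stage.CHILLERS


if __name__ == "__main__":
    # Illustrative thresholds only, not taken from the data centre design.
    for temp in (5, 20, 35):
        print(temp, select_stage(temp, wells_start_c=15, chillers_start_c=28).value)
```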

The infrastructure is equipped with security systems for early warning fire detection and extinguishing (both inert gas and water mist, based on the characteristics of the room being protected), access control, anti-intrusion system and security cameras.

Systems are monitored and managed via a Building Management System (BMS), which can monitor all the main components and provide periodic reports of consumption. Environmental sustainability has not been neglected: the data centre has been certified at Platinum level under LEED (Leadership in Energy and Environmental Design), a set of rating systems for the design, construction, operation and maintenance of green buildings. Certification is awarded at Certified, Silver, Gold and Platinum levels; Platinum, the highest, indicates the highest level of environmentally responsible construction with efficient use of resources.

| | Reading | Bologna |
|---|---|---|
| Power available | 5.6 MW @ 430 V | 10 MW @ 15 kV |
| Total cooling capacity | 7,500 kW @ 42°C outdoor | 7,800 kW @ 45°C outdoor |
| DRUPS | 4 DRUPS, 1,400 kW each | 5 DRUPS, 2 MW each |
| Chillers | 10 chillers: 2x700 kW, 1x850 kW, 7x750 kW | 9 chillers: 865 kW each |
| Data halls | 1x1000 m², 1x500 m² | 2x1000 m² |
| Data storage | 1x250 m² | 2x560 m² |
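The figures in the comparison table can be cross-checked with simple arithmetic. The short Python sketch below sums the per-unit ratings listed in the table. It assumes, as an interpretation not stated in the article, that the quoted totals correspond to the sum of the individual unit ratings.

```python
# Per-unit ratings in kW, as listed in the comparison table.
reading_chillers_kw = [700] * 2 + [850] * 1 + [750] * 7
bologna_chillers_kw = [865] * 9
reading_drups_kw = [1400] * 4
bologna_drups_kw = [2000] * 5


def total_kw(units):
    """Sum of the nominal ratings of a list of units, in kW."""
    return sum(units)


if __name__ == "__main__":
    print(total_kw(reading_chillers_kw))  # 7500, matches 7,500 kW
    print(total_kw(bologna_chillers_kw))  # 7785, about 7,800 kW
    print(total_kw(reading_drups_kw))     # 5600, i.e. 5.6 MW
    print(total_kw(bologna_drups_kw))     # 10000, i.e. 10 MW
```

On this reading, the nine Bologna chillers (four per plant plus the reserve) add up to 7,785 kW, which the table rounds to 7,800 kW.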

The Bologna Tecnopolo

The Tecnopolo former tobacco factory in Bologna is the most recent node of the Regional Network of technopoles, which also includes ten other places that host research, innovation and technology transfer laboratories. It covers an area of over 100,000 square metres entirely dedicated to science and technology and their applications. The conditions of use make particular reference to supercomputing and data and their use for advancing knowledge, the improvement of the lives of citizens, and the competitiveness of the economic system. Bologna Tecnopolo, closely connected with the academic system and Italian and European research, offers enough flexibility to meet future ECMWF expansion requirements.

In addition to the ECMWF data centre, the Tecnopolo will host from 2022 the pre-exascale EuroHPC supercomputer Leonardo managed by Cineca and the new Data Centre of the National Institute of Nuclear Physics (INFN), which needs a new location to process the huge amount of data from European experiments on nuclear physics.

The headquarters of the National Agency for New Technologies, Energy and Sustainable Economic Development and the Competence Center Industry 4.0, dedicated to the use of Big Data in industry, are also planned for construction at the Tecnopolo site.

Finally, a new building is being built specifically for international research centres in the fields of supercomputing, climate, Earth observation and human development that would like to have their headquarters at the Tecnopolo. Among these is a project, at an advanced stage of development, to host an office of the United Nations University (UNU) on Big Data and artificial intelligence for the management of human habitat change.

ECMWF’s new data centre in Bologna is the first project to be completed at the Tecnopolo site. It will soon be part of a broader structure at the forefront of technologies in supercomputing, Big Data and artificial intelligence (AI). This will support ECMWF on its path towards its long-term strategic goals.

ECMWF would like to thank the Italian government and the Emilia-Romagna region for their role in building the new data centre. A video on the Bologna Tecnopolo becoming a leading place for supercomputers, in Italian with English subtitles, is available here: https://www.youtube.com/watch?v=96TfXHCWxf8.

Further reading

Hawkins, M. & I. Weger, 2020: HPC2020 – ECMWF’s new High-Performance Computing Facility, ECMWF Newsletter No. 163, 32–38.