Experts meeting at ECMWF on 2 and 3 March 2016 discussed how best to assess the quality of weather forecasts in the future, including predictions of extreme events.

Checking how well ECMWF’s weather forecasts match observed outcomes is part of the daily work of scientists in the Centre’s Evaluation Section.

Verification results do not just tell them how well ECMWF is doing in its mission to provide high-quality global medium-range weather forecasts. They can also provide vital clues as to how the models that produce the forecasts can be improved.

“Verifying and diagnosing our forecasts is crucial because it drives improvements forward,” ECMWF Director-General Florence Rabier said at the start of the informal Workshop on Verification Measures.

However, verifying forecasts correctly can be a scientific challenge in its own right.

ECMWF Fellow Professor Tilmann Gneiting told the meeting that the public often judges forecast performance solely by looking at extreme events: did the forecast correctly predict a particular windstorm, heat wave or torrential downpour?

But if we measure forecast performance only on extreme events that actually occurred, we could go horribly wrong. A ‘silly’ forecast that predicts a severe windstorm every day would score highly if assessed only on days when a severe windstorm occurs, even though it would issue a false alarm on every other day.
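The pitfall can be reproduced with a few lines of code. This is a minimal sketch using made-up data: 100 hypothetical days with simple yes/no storm forecasts, scored in two illustrative ways (the hit rate on storm days only, and the proportion of correct forecasts over all days). Neither score is claimed to be one that ECMWF uses.

```python
# Minimal sketch of the 'forecaster's dilemma' with made-up yes/no storm forecasts.
# The data and both scores are illustrative assumptions, not ECMWF verification practice.

def hit_rate_on_event_days(forecast, observed):
    """Score computed only on days when a storm was observed (the misleading approach)."""
    event_days = [f for f, o in zip(forecast, observed) if o]
    return sum(event_days) / len(event_days)

def proportion_correct(forecast, observed):
    """Score computed over all days, so false alarms are penalised as well as misses."""
    return sum(f == o for f, o in zip(forecast, observed)) / len(observed)

# 100 hypothetical days with a severe storm on every 20th day (5 storm days).
observed = [day % 20 == 0 for day in range(100)]

# 'Silly' forecast: a severe storm every single day.
always_storm = [True] * 100

# A more skilful forecast: misses the storm on day 80, false alarms on days 10 and 50.
skilful = list(observed)
skilful[80] = False
skilful[10] = True
skilful[50] = True

for name, fc in [("always storm", always_storm), ("skilful", skilful)]:
    print(f"{name:>12}: storm days only = {hit_rate_on_event_days(fc, observed):.2f}, "
          f"all days = {proportion_correct(fc, observed):.2f}")
```

Scored on storm days alone, the ‘always storm’ forecast is perfect (1.00) and beats the skilful one (0.80); scored over all days, the ranking reverses (0.05 against 0.97).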

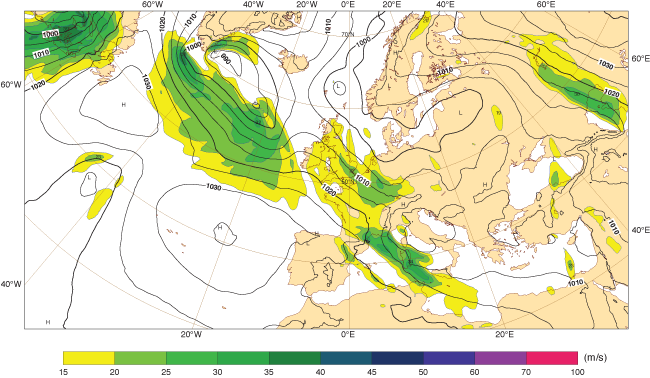

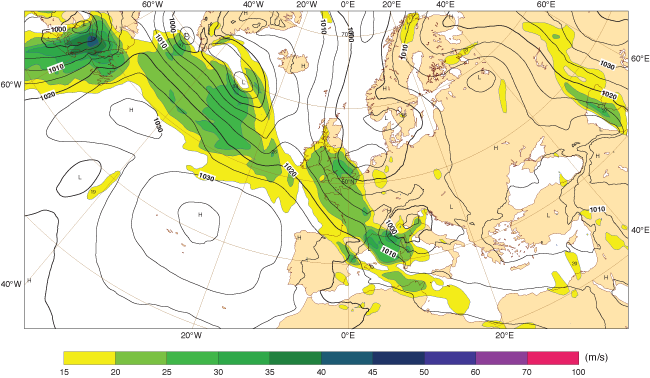

ECMWF’s five-day forecast of wind speeds at 850 hPa (about 1.5 km above sea level) for 00 UTC on 3 March 2016 (top) provided a good prediction of areas of high winds observed on the day, as shown in the corresponding wind analysis (bottom). But using only examples of very windy conditions to assess forecast performance can produce misleading results.

The ‘forecaster’s dilemma’

The public’s perception confronts forecasters with a dilemma, said Professor Gneiting, who leads the Computational Statistics Group at the Heidelberg Institute for Theoretical Studies in Germany: should they stick to their best forecast and risk public opprobrium, or should they adjust (or ‘hedge’) it to minimise such risk?

Fortunately, he presented a way out: while we must insist on including the full range of forecast cases in our assessment of forecast quality, we can give particular weight to extreme events (both observed and forecast) if that is what we are interested in.

In probabilistic forecasting, this can be done by including a weighting factor in the formula used to compute the Continuous Ranked Probability Score (CRPS).
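For reference, one standard way of writing this in the statistical literature is the threshold-weighted CRPS, where F is the forecast cumulative distribution function, y the observed value and w(z) a non-negative weight. The article does not say which weight was discussed at the workshop, so the indicator weight below, with a hypothetical threshold r, is an illustrative assumption:

$$\mathrm{CRPS}(F, y) = \int_{-\infty}^{\infty} \left( F(z) - \mathbb{1}\{y \le z\} \right)^2 \, dz$$

$$\mathrm{twCRPS}(F, y) = \int_{-\infty}^{\infty} \left( F(z) - \mathbb{1}\{y \le z\} \right)^2 \, w(z) \, dz, \qquad w(z) = \mathbb{1}\{z \ge r\}$$

With w(z) = 1 everywhere the weighted score reduces to the ordinary CRPS; with the indicator weight, only the part of the distribution at or above r contributes, whether the extreme value was forecast, observed, or both.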

The CRPS measures how closely a forecast probability distribution, for example of precipitation amount or wind speed, matches the observed outcome; lower values indicate better forecasts. If properly weighted, the CRPS can serve as an objective test of how good a forecasting system is at predicting extreme events.
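As a minimal sketch, the CRPS of an ensemble forecast can be computed by treating the members as an equally weighted empirical distribution and using the kernel form E|X − y| − ½ E|X − X′|. For a weight w(z) equal to 1 at or above a threshold and 0 below it, a known identity gives the threshold-weighted CRPS by first transforming members and observation with the chaining function v(z) = max(z, threshold). The function names and the choice of weight are assumptions for illustration, not ECMWF's operational code.

```python
# Minimal sketch: CRPS and threshold-weighted CRPS for an ensemble forecast.
# Assumes equally weighted members and the weight w(z) = 1 for z >= threshold, else 0.
# Function names are hypothetical; this is not ECMWF's operational implementation.

def crps_ensemble(members, obs):
    """Kernel form of the CRPS for the empirical ensemble distribution (lower is better)."""
    n = len(members)
    mean_abs_error = sum(abs(x - obs) for x in members) / n
    mean_spread = sum(abs(a - b) for a in members for b in members) / (n * n)
    return mean_abs_error - 0.5 * mean_spread

def tw_crps_ensemble(members, obs, threshold):
    """Threshold-weighted CRPS via the chaining function v(z) = max(z, threshold),
    the antiderivative of the indicator weight, followed by the ordinary CRPS."""
    chain = lambda z: max(z, threshold)
    return crps_ensemble([chain(x) for x in members], chain(obs))

# Hypothetical three-member wind-speed ensemble (m/s) and observation.
members, obs = [10.0, 20.0, 30.0], 20.0
print(crps_ensemble(members, obs))           # about 2.22
print(tw_crps_ensemble(members, obs, 25.0))  # about 0.56: only winds >= 25 m/s count
```

With a single member the CRPS reduces to the absolute error, which is one way to check the implementation. Under the weighted score, a forecast that keeps all members below the threshold on a calm day scores zero, while an ‘always storm’ forecast is penalised on that day.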

Improvement in skill

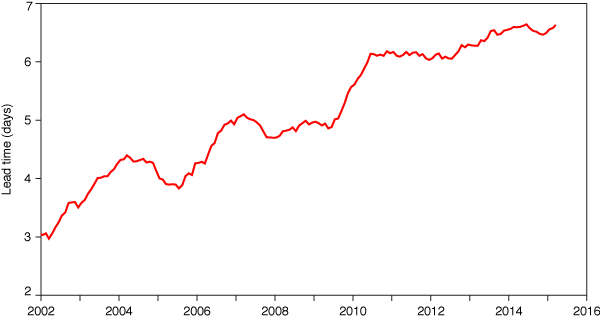

The Continuous Ranked Probability Skill Score (CRPSS) compares the CRPS of a forecast with that of a reference forecast: a value of 1 indicates a perfect forecast, and a value of 0 or below indicates no improvement over the reference. It can be used to monitor the long-term evolution of the skill of ensemble forecasts, which describe the range of possible weather scenarios and their likelihood of occurrence.
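A minimal sketch of the skill score, assuming it is computed from CRPS values averaged over many forecast cases as CRPSS = 1 − mean CRPS(forecast) / mean CRPS(reference). Which reference ECMWF uses (for example a climatological forecast) is not stated here, so the reference values below are hypothetical.

```python
# Minimal sketch: CRPSS from per-case CRPS values of a forecast and a reference.
# Averaging over cases before taking the ratio is an assumption of this sketch.

def crpss(crps_forecast, crps_reference):
    """1 = perfect forecast, 0 = no better than the reference, < 0 = worse."""
    mean_fc = sum(crps_forecast) / len(crps_forecast)
    mean_ref = sum(crps_reference) / len(crps_reference)
    return 1.0 - mean_fc / mean_ref

# Hypothetical CRPS values (mm of 24-hour precipitation) for three forecast cases.
print(crpss([0.8, 1.2, 1.0], [2.0, 2.0, 2.0]))  # about 0.5
```

Averaging before dividing keeps a few cases with a near-zero reference CRPS from dominating the score.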

The chart shows the evolution of the skill of ECMWF’s ensemble forecasts in predicting 24-hour precipitation totals in the northern hemisphere extra-tropics. The 12-month running average of the forecast range at which the CRPSS drops below 0.1 has increased from three days in 2002 to nearly seven days in 2015.
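The headline measure can be sketched in two steps: find the lead time at which a CRPSS curve first falls below 0.1, then smooth the monthly values with a 12-month running mean. This is a minimal sketch under stated assumptions: linear interpolation between the two bracketing lead times and a trailing (rather than centred) running mean are illustrative choices, and the CRPSS curve below is made up, not ECMWF data.

```python
# Minimal sketch: lead time at which CRPSS drops below 0.1, plus a 12-month running mean.
# Interpolation scheme, trailing window and input values are illustrative assumptions.

def lead_time_below(lead_days, skill, level=0.1):
    """First lead time at which skill falls below `level`, linearly interpolated
    between the bracketing lead times. Returns None if no such crossing is found."""
    pairs = list(zip(lead_days, skill))
    for (t0, s0), (t1, s1) in zip(pairs, pairs[1:]):
        if s0 >= level > s1:
            return t0 + (s0 - level) / (s0 - s1) * (t1 - t0)
    return None

def running_mean(values, window=12):
    """Trailing running mean; the first window - 1 entries are None."""
    return [None if i < window - 1 else sum(values[i - window + 1:i + 1]) / window
            for i in range(len(values))]

# Hypothetical CRPSS curve for one month of forecasts, lead times in days.
lead_days = [1, 2, 3, 4, 5, 6, 7, 8]
skill = [0.6, 0.5, 0.4, 0.3, 0.2, 0.12, 0.08, 0.05]
print(lead_time_below(lead_days, skill))  # about 6.5 days
```

Applying `lead_time_below` to each month's verification and passing the resulting series to `running_mean` would give a smoothed curve of the kind described above.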

Part of the purpose of the workshop, which was attended by 27 scientists from ECMWF and its Member and Co-operating States, was to further develop the range of scores and metrics used at ECMWF to monitor forecast performance.

The goal is to adapt this set of scores and metrics to ECMWF’s future needs, such as evaluating forecast skill for high-impact weather at lead times of two to four weeks.

The topics discussed included the verification of high-impact weather, extended-range verification methods, and user-oriented verification.

“The discussions were very productive and covered a wide range of issues associated with the verification of numerical weather forecasts,” said Thomas Haiden, ECMWF’s Verification Group Team Leader.

“There was some emphasis on the evaluation of heat waves and cold spells in the extended range, and on questions of observation representativeness and upscaling,” he added.

“The outcome of the workshop will help us set priorities for future developments in verification as the complexity and scope of ECMWF’s forecasting system continues to grow.”