ECMWF’s high-performance computing facility (HPCF) is at the core of its operational and research activities and is upgraded on average every four or five years.

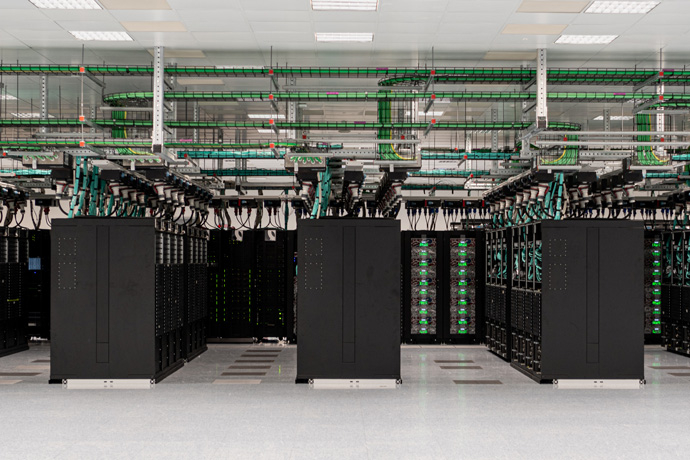

Following a competitive procurement, ECMWF entered into a service contract worth over 80 million euros with Atos for the supply of an HPCF based on its BullSequana XH2000 supercomputer technology. The HPCF is installed in ECMWF’s data centre in Bologna, Italy, from where it took over the production of operational forecasts on 18 October 2022.

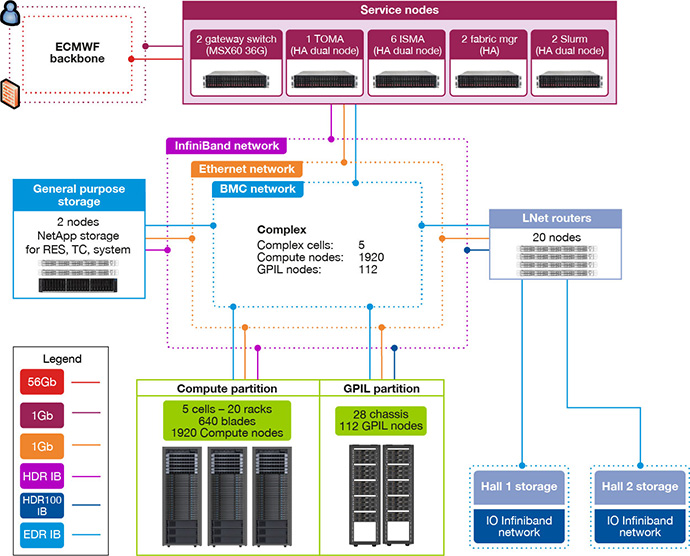

Atos Sequana XH2000 system configuration

The main system from Atos is made up of four self-sufficient clusters, also called ‘complexes’. Each cluster is connected to all the high-performance storage.

There are two types of nodes that run user workloads: ‘compute nodes’ for parallel jobs, and ‘GPIL nodes’ for general purpose and interactive workloads. Other nodes have special functions, such as managing the system, running the scheduler and connecting to the storage.

Overview of an HPC cluster. There are four of these in the system.

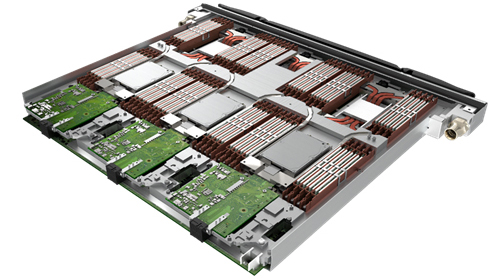

Sequana XH2000 AMD compute blade with three nodes.

The 7,680 compute nodes form the bulk of the system and are located in BullSequana XH2000 high-density racks. Each rack has 32 blades, with three dual-socket nodes per blade, and uses direct liquid cooling to extract the heat from the processors and memory to a liquid-cooling loop in the rack. This cooling method allows the compute nodes to be densely packed and the number of racks to be minimised. A heat exchanger at the bottom of the rack connects to the building’s water cooling system. The cooling system in the rack accepts inlet water at up to 40°C. This provides plenty of opportunity for cooling using just the outside air, without any need for energy-consuming chillers, which lowers the amount of electricity required by the system and improves overall efficiency.
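The packing figures above imply a rack count that the text does not state directly. The following is a minimal sketch of that arithmetic, assuming every compute rack is fully populated; the rack count it derives is an inference, not a figure from ECMWF.

```python
# Figures quoted in the text above.
BLADES_PER_RACK = 32
NODES_PER_BLADE = 3
TOTAL_COMPUTE_NODES = 7_680

nodes_per_rack = BLADES_PER_RACK * NODES_PER_BLADE

# Derived value, assuming every compute rack is fully populated.
compute_racks, remainder = divmod(TOTAL_COMPUTE_NODES, nodes_per_rack)

print(f"{nodes_per_rack} nodes per rack, {compute_racks} racks, {remainder} nodes left over")
```

The division comes out even, which is consistent with the assumption of fully populated racks.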

The GPIL nodes run at a slightly higher frequency and have more memory per node than the compute nodes. The different node type allows Atos to include a 1 TB solid-state disk in each node for local high-performance storage. The racks with GPIL nodes are less densely populated than the compute node racks and can therefore use a simpler cooling infrastructure. Fans remove the heat from the GPIL nodes and blow the hot air out through a water-cooled ‘rear-door’ heat exchanger. This method is less efficient than direct liquid cooling and cannot handle the same heat load or cooling water temperature, but the use of standard servers facilitates maintenance and is less costly.

| | Cray XC40 | Atos Sequana XH2000 |
|---|---|---|
| Clusters | 2 | 4 |
| Processor type | Intel Broadwell | AMD Epyc Rome |
| Cores | 18 cores/socket, 36 cores/node | 64 cores/socket, 128 cores/node |
| Base frequency | 2.10 GHz | 2.25 GHz (compute), 2.5 GHz (GPIL) |
| Memory/node | 128 GiB | 256 GiB (compute), 512 GiB (GPIL) |
| Total number of compute nodes | 7,020 | 7,680 |
| Total number of GPIL nodes | 208 | 448 |
| Total memory | 0.9 PiB | 2.1 PiB |
| Total number of cores | 260,208 | 1,040,384 |
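The totals in the comparison table can be cross-checked from the per-node figures. The sketch below does so under two assumptions that the table does not spell out: the totals count compute and GPIL nodes together, and the non-compute nodes of the Cray XC40 have the same 128 GiB as its compute nodes. The function name `totals` is illustrative only.

```python
GIB_PER_PIB = 1024 ** 2


def totals(compute_nodes, gpil_nodes, cores_per_node, compute_gib, gpil_gib):
    """Return (total cores, total memory in PiB) for compute plus GPIL nodes."""
    cores = (compute_nodes + gpil_nodes) * cores_per_node
    memory_gib = compute_nodes * compute_gib + gpil_nodes * gpil_gib
    return cores, memory_gib / GIB_PER_PIB


# Assumption: the Cray's 208 general-purpose nodes also carry 128 GiB each.
cray = totals(7_020, 208, 36, 128, 128)
atos = totals(7_680, 448, 128, 256, 512)

for name, (cores, memory_pib) in (("Cray XC40", cray), ("Atos XH2000", atos)):
    print(f"{name}: {cores:,} cores, {memory_pib:.1f} PiB")
```

Under these assumptions the computed values agree with the table rows for total cores and total memory.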

High-performance storage

The Atos HPCF has a hierarchical storage design with two pools of solid-state disk (SSD) storage in addition to traditional disk storage pools. Each SSD pool is designed to hold data generated by the operational forecast suites for a couple of days. After this time, the suite moves the data to storage pools with higher capacity, but lower performance. The storage uses the Lustre parallel file system and is provided by DataDirect Networks (DDN) EXAScaler appliances.

Network

The Atos system uses a ‘High Data Rate’ (HDR) InfiniBand network produced by Mellanox. The HDR technology boosts application performance by keeping latencies (the time for a message to go from one node to another) down to less than a microsecond and enabling each cluster to have a bisection bandwidth of more than 300 terabits per second (corresponding to the simultaneous streaming of 37.5 million HD movies).
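The movie comparison implies a particular bit rate per stream. As a rough check, assuming ‘terabit’ means 10^12 bits and using only the two figures quoted above:

```python
BISECTION_BITS_PER_S = 300e12   # "more than 300 terabits per second"
HD_STREAMS = 37.5e6             # "37.5 million HD movies"

# Bandwidth available to each stream if the bisection bandwidth is shared evenly.
per_stream_mbit = BISECTION_BITS_PER_S / HD_STREAMS / 1e6

print(f"{per_stream_mbit:.1f} Mbit/s per stream")
```

The comparison therefore assumes roughly 8 Mbit/s per HD stream.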

The compute nodes in a cluster are grouped into ‘cells’ of four Sequana racks. Each cell has ‘leaf’ and ‘spine’ switches. Each compute node is connected to a leaf switch, and each leaf switch is connected to every spine switch in the cell, so that all 384 nodes in a cell are connected in a non-blocking ‘fat-tree’ network. Each spine switch has a connection to the corresponding spine in every other cell, yielding a ‘full-bandwidth Dragonfly+’ topology. The GPIL nodes are also connected to the high-performance interconnect so that they have full access to the high-performance parallel file system.
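The cell layout can be related back to the node counts with a short calculation. This sketch assumes the 7,680 compute nodes are split evenly across the four clusters, which the text suggests but does not state; the number of cells per cluster and the inter-cell link counts are derived values, not published figures.

```python
RACKS_PER_CELL = 4
NODES_PER_RACK = 32 * 3          # 32 blades, three nodes per blade
TOTAL_COMPUTE_NODES = 7_680
CLUSTERS = 4

nodes_per_cell = RACKS_PER_CELL * NODES_PER_RACK

# Assumption: compute nodes are divided evenly between the clusters.
nodes_per_cluster = TOTAL_COMPUTE_NODES // CLUSTERS
cells_per_cluster = nodes_per_cluster // nodes_per_cell

# Each spine links to the corresponding spine in every other cell of the cluster.
links_per_spine_to_other_cells = cells_per_cluster - 1
cell_pairs = cells_per_cluster * (cells_per_cluster - 1) // 2

print(f"{nodes_per_cell} nodes per cell, {cells_per_cluster} cells per cluster")
print(f"{links_per_spine_to_other_cells} inter-cell links per spine, {cell_pairs} cell pairs")
```

The 384 nodes per cell match the figure given in the text.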

As well as the networking for the compute and storage traffic, network connections to the rest of ECMWF are needed. Gateway routers connect from the high-performance interconnect to the four independent networks in the ECMWF data centre network, enabling direct access from other machines to nodes in the HPC system.

Software environment

The ‘Atos Bull Supercomputing Suite’ is the Atos software suite for HPC environments. It provides a standard environment based on a recent Linux distribution (Red Hat Enterprise Linux, RHEL 7) supported by Mellanox InfiniBand drivers, the Slurm scheduler from SchedMD, the Lustre parallel file system from DDN, and Intel compilers. In addition, PGI and AMD compiler suites and development tools are available.

For in-depth profiling and debugging, the ARM Forge product can be used. This software package includes a parallel debugger (DDT) and a performance analysis tool (MAP). The Lightweight Profiler (Atos LWP) is supplied as well. It provides global and per-process statistics. LWP comes with the Bull binding checker to allow users to confirm that the process binding they are using is as expected. This is an essential function to obtain better CPU performance with large core count, multi-core processors and hyperthreading.
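To illustrate what checking a process binding involves, the sketch below asks the operating system which logical CPUs the current process is allowed to run on. It is not the Bull binding checker, whose interface is not described here; it uses only the Python standard library, and the helper names `allowed_cpus` and `binding_matches` are hypothetical.

```python
import os


def allowed_cpus():
    """Return the sorted logical CPU ids this process may run on."""
    if hasattr(os, "sched_getaffinity"):      # available on Linux
        return sorted(os.sched_getaffinity(0))
    # Fallback for platforms without affinity queries: assume no binding.
    return list(range(os.cpu_count() or 1))


def binding_matches(expected_count):
    """Check that the process is bound to the expected number of logical CPUs."""
    return len(allowed_cpus()) == expected_count


cpus = allowed_cpus()
print(f"pid {os.getpid()} may run on {len(cpus)} logical CPUs: {cpus}")
```

Run inside a job step, a check of this kind shows whether each task has been confined to the cores the user intended, for example one core per task rather than the whole node.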

Containerised software can be run through Slurm using standard commands. Atos provides a complete framework based on the Singularity software package as well as a Slurm plugin for submission and accounting, and a tool to help container creation by users.
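As an illustration of running a container through Slurm, the sketch below generates a batch script that launches a command inside a Singularity image with `srun`. It uses the plain `singularity exec` command and common `sbatch` directives rather than the Atos plugin, whose options are not described here. The image name `my_image.sif`, the job name and the function `container_job_script` are hypothetical.

```python
def container_job_script(image, command, nodes=1, tasks_per_node=1,
                         time_limit="00:10:00"):
    """Build the text of a Slurm batch script that runs a command in a container."""
    lines = [
        "#!/bin/bash",
        "#SBATCH --job-name=container-demo",      # hypothetical job name
        f"#SBATCH --nodes={nodes}",
        f"#SBATCH --ntasks-per-node={tasks_per_node}",
        f"#SBATCH --time={time_limit}",
        "",
        # srun starts the tasks; singularity exec runs the command in the image.
        f"srun singularity exec {image} {command}",
    ]
    return "\n".join(lines) + "\n"


script = container_job_script("my_image.sif", "python3 --version")
print(script)
```

The generated text could be saved to a file and submitted with `sbatch`; site-specific options such as queue or account names would need to be added.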

| Component | Description |
|---|---|
| Operating system | Red Hat Enterprise Linux |
| Main compiler suite | Intel Parallel Studio XE Cluster Edition |
| Secondary compiler suites | PGI compilers and development tools; AMD AOCC compilers and development tools |
| Profiler/debug tool | ARM Forge Professional |
| Batch scheduler | Slurm |