Peter Bauer, Deputy Director of Research and Programme Manager of the ECMWF Scalability Programme

As the head of ECMWF’s Scalability Programme, I will have a leading role at the ECMWF high-performance computing (HPC) workshop in September, where we will be discussing what is perhaps the biggest revolution in numerical weather prediction since computer-based forecasts began back in the 1950s. In the first of three blogs, I outline the challenges and how, through collaboration, we are rising to them.

Why is scalability the headline topic of the 2018 ECMWF HPC workshop?

Actually, scalability has been the key theme of previous ECMWF HPC workshops too. This year is special because we are approaching the completion of the first milestones of the ECMWF Scalability Programme and, with the procurement of our next supercomputer coming up, new technologies that offer better ways to achieve scalability are becoming important.

The HPC workshop offers a unique forum for bringing together operational centres mostly concerned with running cost-effective forecasting systems on affordable HPC infrastructures, research teams exploring cutting-edge methodologies and novel technologies for future solutions, and HPC industry representatives interested in providing the most suitable technological solutions for an application community with enormous socio-economic impact: weather and climate prediction.

The workshop programme comprises programmatic overview talks, expert presentations and panel discussions focusing on key topics such as the European Roadmap towards Exascale and the Convergence of HPC and the Cloud, and separate showroom presentations on the latest technology roadmaps provided by vendors.

Why does weather and climate prediction present such a challenging computing task?

Forecasts are based on tens of millions of observations made every day around the globe and on physically based numerical models that represent processes acting on scales from hundreds of metres to thousands of kilometres in the atmosphere, the ocean, the land surface and the cryosphere. Forecast production and product dissemination to users are always time-critical, and forecast output data volumes already reach petabytes per week today.

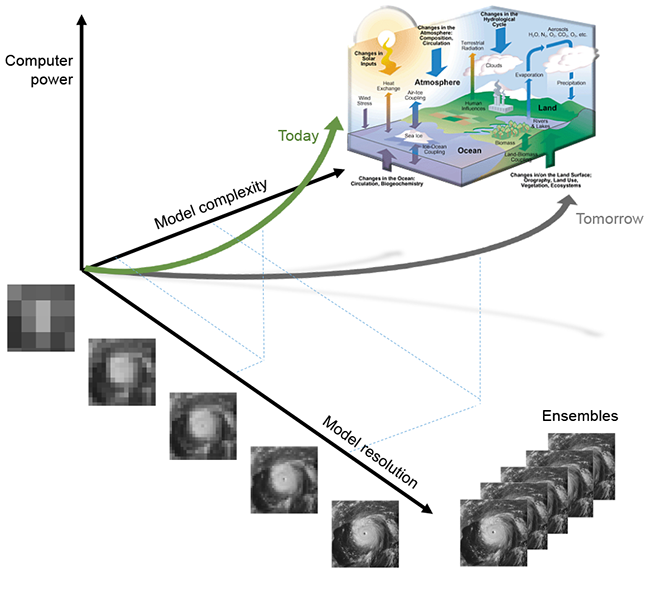

If we want to achieve a qualitative change in our models, we need to run simulations at much, much finer resolutions than today, and – with a focus on enhanced prediction of environmental extremes – with much larger ensembles. Our data assimilation methods need to follow this trend to provide accurate initial conditions at such scales.

Meeting these requirements translates into high-performance computing and data management resources at least 1000 times bigger than today's – towards what’s generally called ‘exascale’. However, the term ‘exascale’ can be misleading as it is often associated with exaFLOPS computing (1 exaFLOPS = 10^18 floating point operations per second). Our current codes only reach about 5% efficiency on supercomputers, so we are actually far away from true exascale computing. The Scalability Programme aims to overcome this hurdle.
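To make the gap concrete, here is a rough back-of-the-envelope sketch of what 5% efficiency implies. It assumes that "efficiency" means the fraction of a machine's peak floating-point rate that a code actually sustains. The 1 exaFLOPS peak machine is a hypothetical example, not a specific system, and the function names are purely illustrative.

```python
# Illustrative arithmetic only. The 5% figure comes from the text above;
# the 1 exaFLOPS peak machine is an assumed example, not a real system.

EXA = 1e18   # 1 exaFLOPS = 10^18 floating point operations per second
PETA = 1e15  # 1 petaFLOPS = 10^15 floating point operations per second


def sustained_flops(peak_flops: float, efficiency: float) -> float:
    """Rate a code actually sustains when it reaches `efficiency` of peak."""
    return peak_flops * efficiency


def peak_needed(target_sustained: float, efficiency: float) -> float:
    """Peak rate a machine would need for the code to sustain the target."""
    return target_sustained / efficiency


efficiency = 0.05  # 5% of peak, as quoted above

sustained = sustained_flops(1 * EXA, efficiency)
required_peak = peak_needed(1 * EXA, efficiency)

print(f"Sustained on a 1 exaFLOPS peak machine: {sustained / PETA:.0f} petaFLOPS")
print(f"Peak needed to sustain 1 exaFLOPS: {required_peak / EXA:.0f} exaFLOPS")
```

Under these assumptions, a machine with a nominal peak of 1 exaFLOPS would deliver only about 50 petaFLOPS to such a code, and sustaining a true exaFLOPS would take roughly 20 times that peak. That is why improving the efficiency of the codes matters as much as buying bigger machines.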

Efforts are under way to limit the need for greater computer power as model complexity and model resolution grow.

What will future forecasting systems look like?

With the realisation that scalability is one of the key stepping stones for achieving better forecasts, the weather and climate prediction community is currently undergoing one of its biggest revolutions since the foundation of the underlying numerical techniques in the 1950s. This revolution encompasses a fundamental redesign of mathematical algorithms and numerical methods, the adaptation to new programming models, the implementation of dynamic and resilient process management and the efficient post-processing and handling of big data.

The trend away from centralised computing and data processing towards cloud computing will occupy a prominent role at this year’s workshop. Cloud computing implies a rethinking of entire workflows and the way forecasting centres share the work between research, operations and external services like Copernicus.

For institutions like ECMWF it is very challenging to redesign their entire forecasting systems while keeping the incremental evolution of the operational configurations alive. Historically, the latter used to claim all the available staff resources, even more so with the continual need for higher spatial resolution, more accurate initial conditions, more Earth system complexity and more reliable ensembles leading to enhanced predictive skill across scales from days to seasons – and maintaining world leadership in the medium range by the way!

Reinventing important components of the system and managing the hand-over from traditional to novel versions of these components at the same time is much, much more than a short-term research project – it requires a substantial commitment of an entire organisation along with sufficient funding.

How can the weather and climate community overcome these challenges?

Challenges of this dimension obviously require a substantial investment in defining the vision and its implementation. The vision needs to take along the entire weather and climate community, and to match the scientific needs with appropriate technological solutions. The fundamental questions around model formulation, workflow design, programming models and domain-specific processors need to be properly addressed with a long-term view of how an efficient world-leading weather prediction centre should operate in 10–20 years’ time.

In the past, ECMWF approached this mostly with a focus on its own developments, and this has undoubtedly led to both very efficient systems and skilful predictions. However, the sheer magnitude of the task, to redesign an entire forecasting system and achieve performance gains of a factor of 1000 or more, clearly goes beyond traditional thinking. It needs Member State organisations as much as support from academia, HPC centres and the HPC industry.

Of great help so far have been a number of projects funded by the European Commission supporting future and emerging technologies and e-infrastructures. The first generation of projects, such as ESCAPE, NextGenIO and ESiWACE, has already produced significant results, including:

- the first head-to-head comparison of present and future IFS model options on different processor types

- the assessment of full single-precision model runs and

- the invention of a realistic workload simulator for benchmarking.

The second generation of these projects, such as ESCAPE-2, MAESTRO and EPiGRAM-HS, will continue this legacy.

The European Commission and selected EU Member States are also developing their own vision towards future extreme-scale computing capabilities through the EuroHPC initiative. To be successful, this needs a strong push for the supporting software developments including the demonstration of the computational performance of key applications, like weather and climate prediction, on such computing infrastructures. The ECMWF HPC workshop will dedicate a special session to the current European project landscape and its suitability for advancing weather and climate prediction to what is needed in the 21st century. How this will unfold in the complex ecosystem of operational centres, academic research and the HPC industry will be interesting to watch.