Evaluating the quality of our forecasting system is important to ensure continued improvements and high-quality forecasts for Member and Co-operating States and other customers. David Richardson, Head of ECMWF’s Evaluation Section since 2013, gives an insight into the range of evaluation activities at the Centre, which rely on interaction across ECMWF and with forecast users in Member States and beyond. As he prepares to retire from ECMWF after a 35-year career in weather forecasting research and operations at both ECMWF and the Met Office, he also considers how evaluation will need to evolve to meet future requirements.

What is ‘evaluation’ at ECMWF?

Evaluation is the important and challenging task of assessing the quality of all ECMWF outputs. These include estimates of the current state of the Earth system, called analyses; forecasts (medium-range, sub-seasonal, seasonal); and data of past weather and climate, called reanalyses. A wide range of verification and diagnostics tools is needed, together with a broad understanding of ECMWF’s Integrated Forecasting System (IFS). Evaluation also includes the monitoring of the quality of all the observations received at ECMWF.

How is forecast quality assessed?

We have a set of eight headline scores that allow us to monitor the progress of IFS developments towards our strategic goals. These scores, developed in collaboration with the Technical Advisory Committee of our Member States, are used to evaluate long-term trends in forecast performance. They cover the performance of the ensemble (ENS) and the high-resolution (HRES) forecasts for both upper-air fields and surface weather (temperature, precipitation, wind). They also monitor improvements in forecasts of tropical cyclone tracks. In addition, we routinely verify a much wider range of forecast fields to ensure that forecast quality is comprehensively monitored.

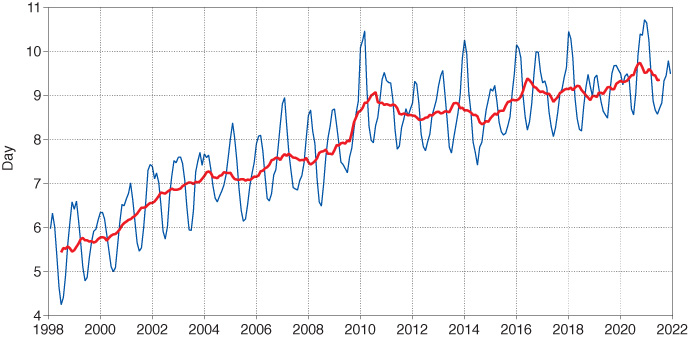

The chart shows the skill of ensemble forecasts (ENS) as measured by one of ECMWF’s primary headline scores: the forecast lead time at which the continuous ranked probability skill score (CRPSS) for ENS forecasts of 850 hPa temperature drops below 25% (blue line: three-month mean; red line: 12-month mean).
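As an illustration of how a score of this kind can be computed, the sketch below estimates the CRPS of an ensemble using the standard kernel form, converts it into a skill score relative to a reference forecast, and interpolates the lead time at which the skill score falls below 0.25. It is a minimal sketch under stated assumptions, not ECMWF's operational verification code: the function names are hypothetical, the reference forecast (for example, climatology) is assumed to be computed by the caller, and linear interpolation between lead times is an assumed choice.

```python
import numpy as np


def crps_ensemble(members, obs):
    """Mean ensemble CRPS over cases, kernel form E|X - y| - 0.5 E|X - X'|.

    members: array of shape (n_cases, n_members); obs: array of shape (n_cases,).
    """
    x = np.asarray(members, dtype=float)
    y = np.asarray(obs, dtype=float)
    # Mean absolute difference between each member and the verifying value.
    error_term = np.mean(np.abs(x - y[:, None]), axis=1)
    # Mean absolute difference over all pairs of members (ensemble spread term).
    spread_term = np.mean(np.abs(x[:, :, None] - x[:, None, :]), axis=(1, 2))
    return float(np.mean(error_term - 0.5 * spread_term))


def crpss(crps_forecast, crps_reference):
    """Skill relative to a reference: 1 is perfect, 0 is no better than the reference."""
    return 1.0 - crps_forecast / crps_reference


def lead_time_below(lead_times, skill, threshold=0.25):
    """First lead time at which skill falls below threshold (linear interpolation).

    Returns None if skill does not cross the threshold within the range given.
    """
    for i in range(1, len(skill)):
        if skill[i - 1] >= threshold > skill[i]:
            frac = (skill[i - 1] - threshold) / (skill[i - 1] - skill[i])
            return lead_times[i - 1] + frac * (lead_times[i] - lead_times[i - 1])
    return None
```

For a single-member "ensemble" the CRPS reduces to the absolute error, which is a convenient sanity check on any implementation.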

We also routinely examine the forecasts in the same way that operational forecasters in our Member States use them, to understand how forecast quality looks from their perspective. Particular errors are reviewed in our weekly weather discussion.

Alongside this daily activity, we conduct longer-term, in-depth studies of key issues, including systematic model errors, to provide a comprehensive understanding of the strengths and weaknesses of the forecasting system.

How do you ensure users can make best use of ECMWF forecasts?

We provide a number of post-processed forecast products designed to help users to extract the most information, especially from the ensemble. The Extreme Forecast Index (EFI) is one example that is widely used in the Member States to alert forecasters to potential high-impact weather events. Other examples include precipitation-type probabilities, tropical cyclone strike probabilities, and extra-tropical cyclone products. We also produce an increasing range of environmental forecasts, including for hydrology and forest fire risk – ECMWF’s contribution to the Copernicus Emergency Management Service.
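The EFI compares the ensemble forecast distribution with the model climate. One published formulation is EFI = (2/π) ∫ from 0 to 1 of (p − F_f(p)) / sqrt(p(1 − p)) dp, where F_f(p) is the proportion of ensemble members lying below the p quantile of the model climate. It is 0 when the forecast distribution matches the climate and approaches +1 or −1 when all members lie beyond the climate extremes. The sketch below evaluates this integral numerically under assumptions: the model climate is supplied as a plain sample, quantiles use NumPy's default interpolation, and the substitution p = sin²θ removes the singularity at the ends of the interval. The function name is hypothetical, this is not ECMWF's operational code, and the companion Shift of Tails (SOT) index is not covered.

```python
import numpy as np


def extreme_forecast_index(ens_members, clim_sample, n_theta=2000):
    """EFI = (2/pi) * integral_0^1 (p - F_f(p)) / sqrt(p (1 - p)) dp.

    ens_members: 1-D array of ensemble values at one grid point.
    clim_sample: 1-D array of model-climate values for the same point and season.
    """
    ens = np.asarray(ens_members, dtype=float)
    clim = np.asarray(clim_sample, dtype=float)
    # Substitute p = sin^2(theta): dp / sqrt(p (1 - p)) = 2 dtheta, so the
    # integrand stays finite and EFI = (4/pi) * integral_0^{pi/2} (p - F_f(p)) dtheta.
    h = (np.pi / 2) / n_theta
    theta = (np.arange(n_theta) + 0.5) * h  # midpoint rule in theta
    p = np.sin(theta) ** 2
    q_clim = np.quantile(clim, p)  # model-climate quantile at each p
    # F_f(p): fraction of ensemble members below the climate quantile.
    f_fc = np.mean(ens[None, :] < q_clim[:, None], axis=1)
    return float((4 / np.pi) * np.sum(p - f_fc) * h)
```

Positive values indicate that the ensemble is shifted towards the upper tail of the model climate (for example, unusually strong gusts); negative values indicate a shift towards the lower tail.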

Alongside the objective performance scores, we provide information about how the forecasts perform in different situations (‘Known forecasting issues’) and in specific severe events (the ‘Severe event catalogue’), together with a comprehensive Forecast User Guide.

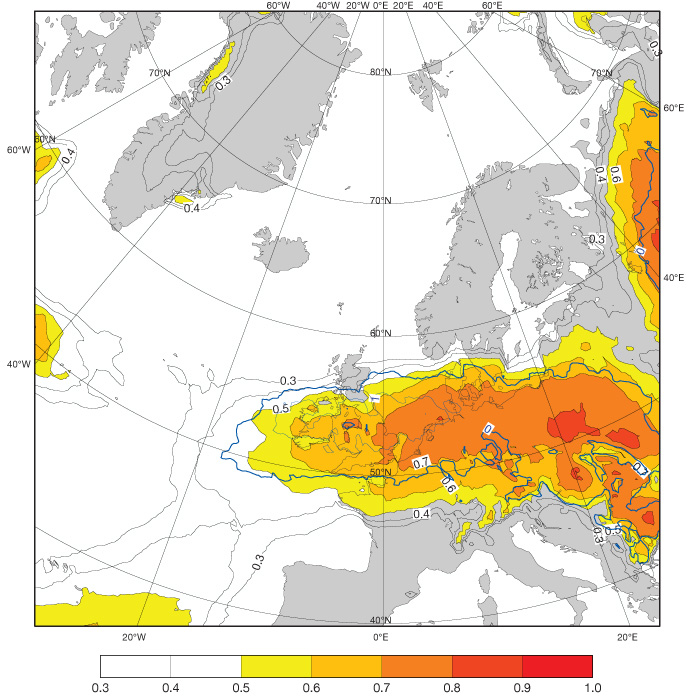

The plots show the Extreme Forecast Index (EFI; shading) and Shift of Tails (SOT; blue contours) for 24-hour maximum wind gusts on 18 February 2022 in forecasts from 00 UTC on 14 February (top) and 24-hour maximum wind gusts observed on 18 February (bottom).

How are performance issues resolved?

An important aspect of evaluation is to identify weaknesses in the current system, to understand as far as possible the cause of these, and to feed back this information to model developers. Feedback from forecast users is an important contribution to this, and the strong relationships we have with our users are key to making sure we benefit from their experience and detailed practical understanding of the performance of our forecasts in their own regions of interest. We also work closely with the model developers to evaluate upgrades to the forecasting system.

The operational observation monitoring identifies any issues with the availability or quality of observations that ECMWF uses to initialise its forecasts. Quick identification of issues allows problems to be reported to the observation provider to rectify. In the meantime, if necessary, the relevant observations can be excluded from the assimilation system so they do not adversely affect ECMWF’s analysis and subsequent forecast.
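A common ingredient of observation monitoring is the statistics of first-guess departures, that is, the observed value minus the model background at the same location. The sketch below is a hypothetical illustration, not ECMWF's monitoring system: it groups departures by station and flags stations that report too rarely, or whose mean departure or departure standard deviation exceeds a limit. The thresholds, record layout and function name are all assumptions chosen for illustration; flagged stations would be candidates for reporting to the provider or for exclusion from the assimilation.

```python
import statistics
from collections import defaultdict


def monitor_departures(records, bias_limit=2.0, std_limit=5.0, min_count=10):
    """Flag suspect stations from first-guess departures.

    records: iterable of (station_id, observed_value, background_value).
    Returns a dict mapping station_id to the reason it was flagged.
    Thresholds are illustrative assumptions, in the units of the observations.
    """
    departures = defaultdict(list)
    for station, observed, background in records:
        departures[station].append(observed - background)

    flagged = {}
    for station, values in departures.items():
        if len(values) < min_count:
            flagged[station] = "too few reports"  # availability problem
            continue
        mean = statistics.fmean(values)
        spread = statistics.pstdev(values)
        if abs(mean) > bias_limit:
            flagged[station] = f"bias {mean:+.2f}"  # systematic offset
        elif spread > std_limit:
            flagged[station] = f"std {spread:.2f}"  # noisy or erratic reports
    return flagged
```

Checking bias before spread means a station with a large systematic offset is reported for that reason first, since an offset is often the easier problem for a provider to diagnose.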

What are the most enjoyable parts of your role as Head of Evaluation?

The Evaluation Section covers a broad range of activities and has strong interaction across ECMWF and with our forecast users in Member States and beyond. It means I get a great overview of how all the different activities at ECMWF come together to make our forecasts, and I also get to see directly how these are used in our Member States.

Visits to our Member State weather services are a great opportunity to see our forecasts used in practice and to get very direct and specific feedback on their performance – what’s good, where we need to improve, and where we can do more to help users get the maximum benefit from what we provide.

ECMWF training courses and user meetings also provide opportunities to meet and talk with users directly.

I have a fantastic group of highly skilled scientists. The most important and rewarding part of my job is to provide the support and leadership they need to empower them to do what they do best – state-of-the-art science and efficient technical implementation to deliver the best, quality-assured forecast outputs that meet the needs and expectations of our forecast users.

What has changed over the years?

The ECMWF forecasting system has become more complex, moving towards a full Earth system model including atmosphere, land, ocean and cryosphere components. We have moved from initially making forecasts only up to ten days ahead to covering all timescales, from days to weeks to seasons ahead.

In evaluation, one change has been the addition of more headline scores. Another is a more efficient and automated approach to observation monitoring, which has enabled us to monitor additional components of the observing system, including the land surface, oceans and cryosphere.

The introduction of environmental forecasting (floods and fire) has expanded the range of forecasting activities, introducing a new group of users. We have extended the evaluation to cover these new forecast products. As well as the direct benefit of showing the quality of the environmental forecasts, their evaluation can also contribute to our understanding of the performance of the IFS.

What does the future hold for evaluation?

ECMWF’s Strategy centres on the development of seamless Earth system simulations, with a particular focus on forecasting high-impact weather events up to a few weeks ahead. We need to deliver high-quality products to meet the needs of our users, and our evaluation must continually evolve to meet these requirements.

We will move further towards a comprehensive and integrated evaluation of all components of the Earth system. We have already seen the benefits this can bring to understanding the behaviour of the Earth system model. As the forecasting system moves towards ever-higher spatial resolution, we will need to develop new evaluation metrics to better understand scale-dependent aspects of forecast performance. Moving towards kilometre-scale resolutions will also introduce new challenges for forecast products and post-processing. In both verification and forecast products, Member States already have valuable experience in very-high-resolution forecasting, and close collaboration with experts in Member States will ensure we can deliver the best possible outputs.
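One established example of a scale-dependent verification metric for high-resolution fields is the fractions skill score (FSS), which compares the fractional coverage of threshold exceedances in forecast and observed fields within square neighbourhoods of increasing size. The sketch below is illustrative only and is not a metric the text says ECMWF will adopt; the function names, the zero padding at the domain edge and the restriction to odd neighbourhood widths are assumptions.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def neighbourhood_fraction(field, threshold, width):
    """Fraction of points >= threshold in a width x width box around each point.

    width must be odd; points outside the domain are treated as not exceeding.
    """
    if width % 2 != 1:
        raise ValueError("width must be odd")
    binary = (np.asarray(field) >= threshold).astype(float)
    pad = width // 2
    padded = np.pad(binary, pad, mode="constant")
    windows = sliding_window_view(padded, (width, width))
    return windows.mean(axis=(-2, -1))


def fractions_skill_score(forecast, observed, threshold, width):
    """FSS = 1 - MSE(Pf, Po) / (mean(Pf^2) + mean(Po^2)); 1 is perfect."""
    pf = neighbourhood_fraction(forecast, threshold, width)
    po = neighbourhood_fraction(observed, threshold, width)
    mse = np.mean((pf - po) ** 2)
    reference = np.mean(pf ** 2) + np.mean(po ** 2)
    if reference == 0:
        return float("nan")  # no exceedances in either field
    return float(1.0 - mse / reference)
```

For a small rain feature displaced by two grid points, the FSS is 0 at the grid scale but rises as the neighbourhood widens, which is the scale-dependent behaviour the score is designed to expose.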

Diagnostics will need to be developed to understand how the different components of the model work together. There will be an increasing focus on how predictability varies in different flow types.

The ever-increasing range of observations and the addition of new components of the Earth system into the IFS put constant pressure on evaluation and monitoring. We need to become even more efficient and introduce more automatic monitoring where we can. Machine learning is one potentially valuable tool that may help to achieve this. It may help to identify issues in the observing system, to analyse verification results systematically to identify conditional biases in the forecasts, and to develop innovative post-processing of the basic model output fields to provide more tailored and case-specific outputs.

ECMWF is currently recruiting for the role of Head of Evaluation (application deadline 31 March 2022). For details, see our Jobs page.