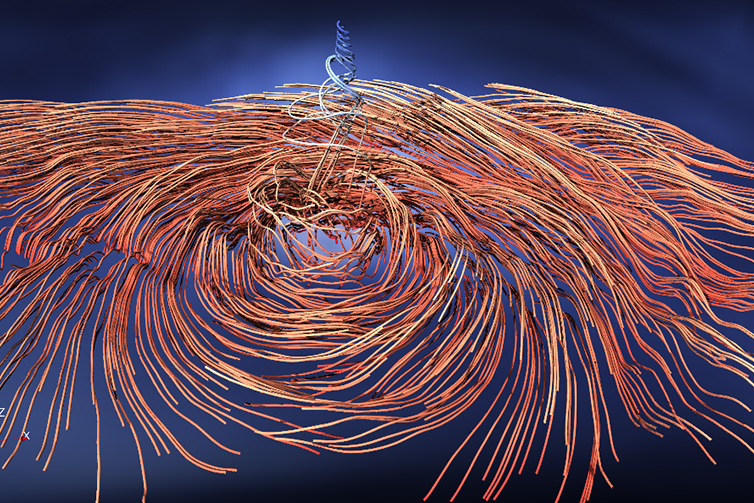

Visualisation of Hurricane Irma, which formed in the Atlantic on 30 August 2017, based on ECMWF’s high-resolution forecasts and produced using NVIDIA visualisation containers. (Image courtesy of Peter Messmer, NVIDIA)

Radical changes to prepare weather forecasting systems for the exascale era of supercomputing are under way. Now there are plans for a major international project to help complete this revolution.

A revolution in the making

Weather prediction is a high-performance computing application with outstanding societal and economic impact. It supports decision-making by citizens and emergency response services and has applications in sectors ranging from hydrology, food, agriculture and energy to insurance and health. Global climate change is likely to change weather patterns, including the frequency and severity of extremes, such as wind storms, floods, heat waves and droughts.

Forecasts are based on millions of observations made every day around the globe, and on physically based numerical models. The models represent processes acting on scales from hundreds of metres to thousands of kilometres in the atmosphere, the ocean, the land surface and the cryosphere. Forecast production and product dissemination to users are always time critical, and data volumes already reach petabytes each week.

Meeting society’s need for improved forecasts delivered in a timely manner will require an increase in high-performance computing and data management resources by a factor of 100 to 1,000 – towards what is generally called the ‘exascale’. To make this possible, weather and climate prediction is undergoing one of its biggest revolutions since its beginnings in the early 20th century. This revolution encompasses a fundamental redesign of mathematical algorithms and numerical methods, the adaptation to new programming models, the implementation of dynamic and resilient workflows and the efficient post-processing and handling of big data.

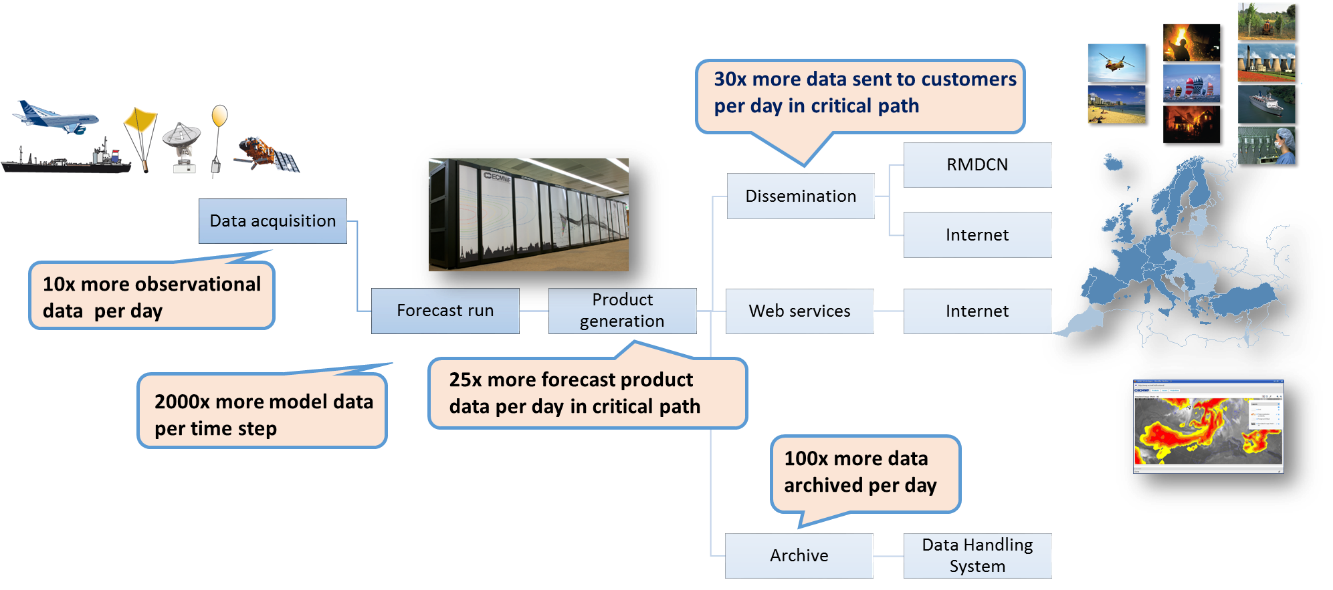

The next ten years are expected to see a dramatic increase in data volume across the ECMWF forecast production chain.

The weather and climate communities are facing up to the need for radical change, but two big questions arise. First, what does it really take to produce a viable solution for operational centres within, say, five to ten years? Second, are operational centres technically and financially equipped to meet this ‘scalability’ challenge?

What does it take?

Regarding the first question, one needs to realise the difficulty of redesigning entire forecasting systems while continuing to incrementally enhance the operational configuration. At ECMWF, the latter has historically claimed all available staff resources. Incremental improvements will continue to be of crucial importance to meet the need for higher spatial resolution, more accurate initial conditions, more Earth-system complexity and more reliable ensemble forecasts, leading to enhanced predictive skill across scales from days to seasons.

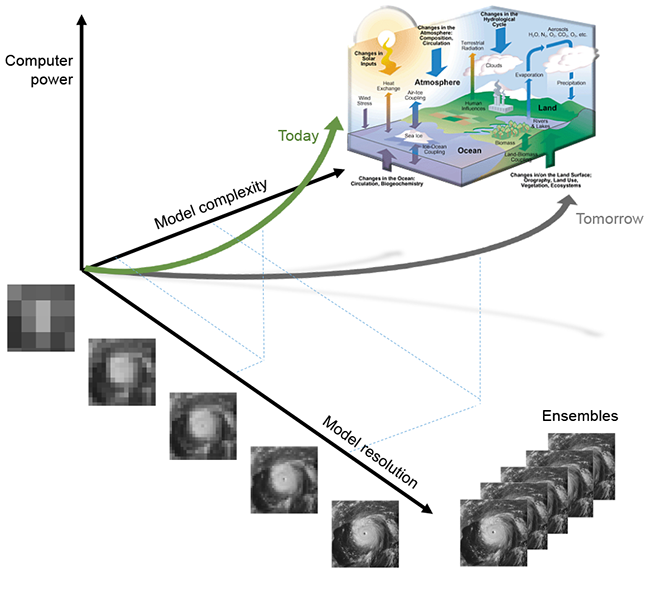

Efforts are under way to limit the need for greater computer power as model complexity and model resolution grow.

Reinventing important components of the system while at the same time managing the handover from traditional to novel versions of these components is far more than a short-term research project: it requires a substantial commitment from the entire organisation, along with sufficient funding.

The recent experience from individual projects in ECMWF’s Scalability Programme clearly shows that significant steps towards a much more efficient, scalable and resilient code can only be made by questioning everything. Two examples of what ‘everything’ means:

Example 1: The forecast model calculates tendencies for key meteorological variables from resolved processes, such as large-scale motions, and from unresolved processes, such as convection, clouds and radiation. Among the latter, cloud and radiation schemes are computationally very expensive. An idea which emerged more than ten years ago, and which has resurfaced recently, is to replace some of these schemes with approximate, artificial-intelligence-type methods. They would be significantly faster but less accurate. Given many unknowns in the current schemes, good statistical performance in ensemble forecasts may be achievable. However, these methods would have to be retrained every time the model changes, i.e. potentially with every model cycle. The trade-off between cost and accuracy will show whether this option is feasible. If such methods were implemented they would benefit from the huge investment of computing companies like NVIDIA in processor technology that suits the training of such schemes particularly well.
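The cost/accuracy trade-off described in Example 1 can be illustrated with a minimal sketch. It is not an ECMWF scheme or method: `expensive_scheme` is a hypothetical toy function standing in for a costly physics scheme, and the 'training' is plain piecewise-linear interpolation rather than an artificial-intelligence method. All names are assumptions made for illustration.

```python
import math
from bisect import bisect_right


def expensive_scheme(x: float, n_terms: int = 500) -> float:
    """Toy stand-in for a costly physics scheme (hypothetical).

    Sums a series term by term so that each call is deliberately expensive.
    """
    return sum(math.sin(k * x) / (k * k) for k in range(1, n_terms + 1))


def train_emulator(scheme, lo: float, hi: float, n_nodes: int):
    """'Train' a cheap emulator by sampling the scheme at n_nodes points."""
    step = (hi - lo) / (n_nodes - 1)
    xs = [lo + i * step for i in range(n_nodes)]
    ys = [scheme(x) for x in xs]  # the only expensive part

    def emulator(x: float) -> float:
        # Find the interval containing x and interpolate linearly.
        i = min(max(bisect_right(xs, x) - 1, 0), n_nodes - 2)
        w = (x - xs[i]) / (xs[i + 1] - xs[i])
        return (1.0 - w) * ys[i] + w * ys[i + 1]

    return emulator


def max_abs_error(scheme, emulator, lo: float, hi: float, n_test: int = 200) -> float:
    """Largest emulator error over evenly spaced test points inside (lo, hi)."""
    pts = [lo + (hi - lo) * (j + 0.5) / n_test for j in range(n_test)]
    return max(abs(scheme(x) - emulator(x)) for x in pts)


# A cheaper emulator (fewer training samples) versus a more accurate one.
coarse = train_emulator(expensive_scheme, 0.0, math.pi, n_nodes=9)
fine = train_emulator(expensive_scheme, 0.0, math.pi, n_nodes=65)
err_coarse = max_abs_error(expensive_scheme, coarse, 0.0, math.pi)
err_fine = max_abs_error(expensive_scheme, fine, 0.0, math.pi)
print(f"9 training samples:  max error {err_coarse:.4f}")
print(f"65 training samples: max error {err_fine:.4f}")
```

Once trained, the emulator never calls the original scheme again, which is where the speed-up comes from. If `expensive_scheme` changes, `train_emulator` has to be rerun, mirroring the retraining cost mentioned above.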

Example 2: ECMWF currently runs its main computing and data handling tasks on two identical clusters, which guarantees resilience and requires only a single stack of system software designed for a single type of processor architecture. With the apparent stagnation of classic processor performance and the emergence of very different architectures, this monolithic approach will no longer be sustainable. It is very likely that specialised processors will be used for selected tasks where time- and energy-to-solution are critical. This also implies much more variety in system software, programming models and even scientific solutions, because some numerical algorithms perform particularly well on certain processors.

The same applies to data handling, which also involves many mathematical operations. The key word here is ‘flexibility’ in both scientific and technical options. This is the opposite of the traditional approach, in which hard technical choices are made based on seemingly optimal scientific solutions. The downside is a much greater initial development effort to create this flexibility. It may also become harder to maintain the system as the software infrastructure will have to deal with many more software layers and asynchronously updated generations of different hardware types.

ECMWF currently runs two Cray XC40 clusters in a resilient configuration.

These examples only address selected parts of the forecasting system but illustrate the scrutiny that needs to be applied. To fully address the efficiency challenge, we need to look at every component that might stand in the way of processing our predictions in an affordable manner in 10 or 20 years’ time.

In the past, ECMWF approached this mostly with a focus on in-house developments. This has without doubt led to both very efficient systems and very skilful predictions. However, the sheer magnitude of the task of redesigning an entire forecasting system to achieve performance gains by a factor of 100 or 1,000 clearly goes beyond traditional thinking. It also exceeds the framework of ECMWF’s expertise and financial resources.

How well equipped are we?

Taking a holistic view of this challenge, ECMWF launched its Scalability Programme in 2013 with strong support from its Member States. Projects initiated as part of the programme cover all current forecasting system steps, from observational data pre-processing, data assimilation and modelling to model output data post-processing and key code developments in view of existing and future computer architectures.

We realised even then that this effort would require the strong involvement of our Member and Co-operating States in two areas. One is governance, because many developments here are being shared with similar ones at national hydro-meteorological centres, so there is a need for coordination. The other is real, collaborative, hands-on research. The Scalability Programme has already achieved a new mindset: in everything we do, computational efficiency is a major consideration, and it may actually drive scientific choices.

A number of externally funded projects have been of great help because they require strict coordination and provide additional resources to perform advanced research. Until now, these funds have been drawn from European Commission programmes supporting Future and Emerging Technologies and e-infrastructures. The first generation of projects, such as ESCAPE, NEXTGenIO and ESiWACE, has already produced measurable results, such as the first head-to-head comparison of present and future model options on different processor types, the assessment of full single-precision model runs, and the invention of a realistic workload simulator for benchmarking.
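To give a feel for what an assessment of single-precision runs involves, the following minimal sketch integrates a toy decay equation in both double and emulated single precision. It is an illustration only, not taken from any of the projects named above: the equation, the step size and the helper `f32` are assumptions, and nothing here says how a full forecast model would behave.

```python
import math
import struct


def f32(x: float) -> float:
    """Round a double-precision Python float to the nearest single-precision value."""
    return struct.unpack("f", struct.pack("f", x))[0]


def integrate_decay(n_steps: int, dt: float, single: bool) -> float:
    """Forward-Euler integration of dy/dt = -y from y(0) = 1 (toy model)."""
    rnd = f32 if single else (lambda v: v)
    y = rnd(1.0)
    dt = rnd(dt)
    for _ in range(n_steps):
        # Round after every arithmetic operation to mimic single-precision storage.
        y = rnd(y - rnd(dt * y))
    return y


y64 = integrate_decay(1000, 0.001, single=False)
y32 = integrate_decay(1000, 0.001, single=True)
exact = math.exp(-1.0)

precision_gap = abs(y32 - y64)        # effect of reduced precision
discretisation_error = abs(y64 - exact)  # effect of the numerical scheme itself
print(f"single vs double:      {precision_gap:.2e}")
print(f"double vs exact value: {discretisation_error:.2e}")
```

In this toy case the loss from reduced precision is smaller than the error already introduced by the time-stepping scheme. Whether the same holds for a given model component is exactly the kind of question such an assessment has to answer case by case.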

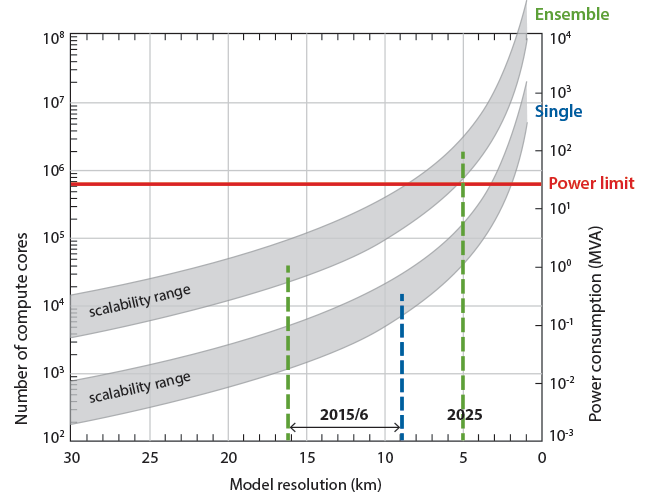

The chart indicates that ECMWF’s ability to continue to increase the resolution of its forecasts, while keeping power consumption within sustainable limits, depends very much on the success of the Scalability Programme. The number of required compute cores with traditional technology, and hence the amount of power consumed, rises rapidly as the resolution of single ‘deterministic’ forecasts and of ensemble forecasts increases.
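Why the cost rises so rapidly with resolution can be sketched with a common rule of thumb, which is an assumption here and not taken from the chart: refining the horizontal grid spacing multiplies the number of grid points in two dimensions and usually forces a proportionally shorter time step, so cost grows roughly with the cube of the refinement factor. The function name, the exponent and the grid spacings below are illustrative only.

```python
def relative_cost(dx_old_km: float, dx_new_km: float, exponent: float = 3.0) -> float:
    """Rule-of-thumb cost ratio when refining horizontal grid spacing.

    Assumes cost ~ (refinement factor) ** exponent, with exponent 3 covering
    two horizontal dimensions plus a shorter time step. Vertical resolution,
    I/O and scalability losses are ignored.
    """
    if dx_old_km <= 0 or dx_new_km <= 0:
        raise ValueError("grid spacings must be positive")
    return (dx_old_km / dx_new_km) ** exponent


# Illustrative grid spacings, not ECMWF configurations.
for dx_new in (5.0, 2.0, 1.0):
    print(f"10 km -> {dx_new:g} km: about {relative_cost(10.0, dx_new):.0f}x the cost")
```

Under this assumption, a tenfold refinement alone lands at the upper end of the factor of 100 to 1,000 quoted earlier, before any increase in ensemble size or model complexity is counted.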

While this funding is very welcome, it is rather difficult to sustain and to channel into the precise directions of research that ECMWF and its Member and Co-operating States require. An opportunity to move beyond this patchwork-type approach may arise from a recent initiative called Flagships, again originating from the Future and Emerging Technologies programme of the European Commission. Flagships are “large-scale, multidisciplinary research initiatives built around a visionary unifying goal that can only be realised through a federated and sustained effort”.

Projects of this dimension require a substantial preparatory investment to define the vision. In the field of numerical weather prediction, the entire weather and climate community needs to be involved, and the project needs to interface scientific needs with appropriate technological solutions. Ideally, this would achieve true co-design, producing the best possible science-technology pairing for weather and climate prediction. The fundamental questions around model formulation, workflow design, programming models and domain-specific processors that are already being investigated in the Scalability Programme could be properly addressed with a long-term view.

The outcome would clearly be a breakthrough for our community because we already know that much-enhanced predictive skill will come from big cost drivers, such as better resolution, more Earth system process detail and larger ensembles, in particular with a focus on the prediction of extremes in the context of climate change.

A Flagship proposal

Given this unique opportunity, ECMWF is developing a preparatory action proposal for a Flagship with strong involvement from the weather and climate prediction community as well as ECMWF Member and Co-operating States, but also including expertise from the food, water, energy, health, geophysics and financial risk management sectors. The main deliverable would be game-changing modelling, computing, data handling and service capabilities producing substantial socio-economic impact because they would prepare the European service infrastructure for the next few decades. The project would be complemented by our community’s excellent collaboration with computing vendors and could lead to new Europe-based technology.

It is clear that we, as an international community, need to address the computing and data challenge one way or another. Only large-scale collaboration will be able to produce results in a cost-effective way by the time much more efficient forecasting systems are needed in operations. Our community is quite unique in terms of collaboration and sharing, and it would be surprising if we were to fail to achieve what is needed.

For more information, please visit the websites of international Scalability Programme projects in which ECMWF is involved: ESCAPE, NEXTGenIO, ESiWACE and EuroEXA.